PATTERNS OF INFERENCE IN BAYES NETWORK

- There are three main patterns of inference in a Bayes network: causal (top-down) inference, diagnostic (bottom-up) inference, and explaining away.

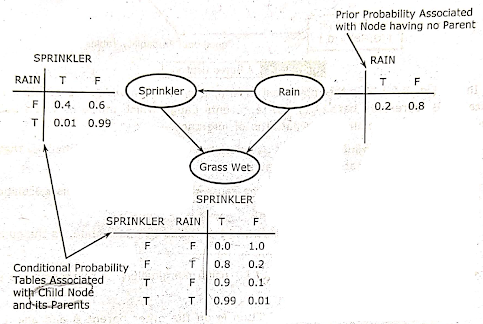

- In order to explain the patterns of inference, let us consider an example Bayes network, shown in Fig. 3.5.1.

Fig 3.5.1: A Bayes Network

- In Fig. 3.5.1, M represents the movement of the arm, L represents that the block is liftable, B represents that the battery is charged, and G represents the gauge, which indicates whether the battery is full. The three patterns of inference are explained next.

Causal or Top-down Inference

Let us calculate P(M | L), that is, the probability that the arm moves given that the block is liftable.

- Because the block being liftable is one of the causes of the arm moving, this calculation is an example of causal reasoning.

- In P(M | L), L is called the evidence and M is called the query node, since the question asks for the probability of M.

- Next, P(M | L) is expanded over both values of M's other parent B, and the chain rule is used to condition M on B as well as on L.

- P(M | L) = P(M | B, L) P(B | L) + P(M | ¬B, L) P(¬B | L)

- As B has no parents, P(B | L) = P(B), and in the same way P(¬B | L) = P(¬B).

- ⇒ P(M | L) = P(M | B, L) P(B) + P(M | ¬B, L) P(¬B)

Substituting the values from Fig. 3.5.1:

- P(M | L) = 0.9 × 0.5 + 0 × 0.5 = 0.45

- ∴ P(M | L) = 0.45
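The substitution above can be checked with a few lines of Python. This is a minimal sketch that uses only the three numbers quoted in the calculation; the function and variable names are illustrative and do not come from the text.

```python
# Values quoted in the worked calculation (taken from Fig. 3.5.1).
p_b = 0.5                # P(B): the battery is charged
p_m_given_b_l = 0.9      # P(M | B, L)
p_m_given_not_b_l = 0.0  # P(M | ¬B, L)


def causal_p_m_given_l(p_b, p_m_b_l, p_m_nb_l):
    """P(M | L) = P(M | B, L) P(B) + P(M | ¬B, L) P(¬B)."""
    return p_m_b_l * p_b + p_m_nb_l * (1.0 - p_b)


p_m_given_l = causal_p_m_given_l(p_b, p_m_given_b_l, p_m_given_not_b_l)
print(round(p_m_given_l, 2))  # 0.45
```

The evidence L never appears as a number here: it only selects which entries of M's conditional probability table are used, while the non-evidence parent B is summed out.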

The operations performed are:

- Rewrite the conditional probability of the query node Q, given the evidence, in terms of the joint probability of Q and all of its parents that are not evidence, given the evidence.

- Re-express this joint probability as the probability of Q conditioned on all of its parents.

Diagnostic or Bottom-up Inference

- Let us calculate the probability that the block is not liftable given that the arm does not move, i.e. P(¬L | ¬M).

- Here an effect is used to infer a cause, so this is called diagnostic reasoning.

Using Bayes rule, this can be converted into the causal form: P(¬L | ¬M) = P(¬M | ¬L) P(¬L) / P(¬M). Here P(¬M | ¬L) is obtained by causal reasoning, and the result is P(¬L | ¬M) = 0.63934.
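The diagnostic step can be sketched numerically as follows. The text only quotes P(B) = 0.5, P(M | B, L) = 0.9 and P(M | ¬B, L) = 0; the remaining entries below, P(L) = 0.5, P(M | B, ¬L) = 0.05 and P(M | ¬B, ¬L) = 0, are assumptions chosen because they reproduce the quoted result 0.63934, and should be checked against Fig. 3.5.1. All names are illustrative.

```python
# Quoted in the text: P(B) = 0.5, P(M | B, L) = 0.9, P(M | ¬B, L) = 0.
# ASSUMED (not stated in the text): P(L), P(M | B, ¬L), P(M | ¬B, ¬L).
P_B = 0.5
P_L = 0.5  # assumption
P_M = {    # P(M = true | B = b, L = l), keyed by (b, l)
    (True, True): 0.9,
    (False, True): 0.0,
    (True, False): 0.05,  # assumption
    (False, False): 0.0,  # assumption
}


def p_not_m_given_l(l):
    """P(¬M | L = l), summing out the non-evidence parent B (causal step)."""
    total = 0.0
    for b in (True, False):
        p_b = P_B if b else 1.0 - P_B
        total += (1.0 - P_M[(b, l)]) * p_b
    return total


def diagnostic_p_not_l_given_not_m():
    """Bayes rule: P(¬L | ¬M) = P(¬M | ¬L) P(¬L) / P(¬M)."""
    numerator = p_not_m_given_l(False) * (1.0 - P_L)
    # P(¬M) is the normaliser: sum over both values of L.
    p_not_m = numerator + p_not_m_given_l(True) * P_L
    return numerator / p_not_m


p_not_l_given_not_m = diagnostic_p_not_l_given_not_m()
print(round(p_not_l_given_not_m, 5))  # 0.63934 under the assumed values
```

Computing P(¬M) as the sum of the two numerators avoids needing a separate table lookup for it, since P(¬L | ¬M) and P(L | ¬M) must add up to 1.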

Explaining Away

- Suppose there is additional evidence ¬B, that the battery is not charged.

- This evidence explains ¬M, making ¬L less certain.

- This inference uses top-down (causal) reasoning embedded within bottom-up (diagnostic) reasoning.

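- According to Bayes rule,

P(¬L | ¬B, ¬M) = P(¬M, ¬B | ¬L) P(¬L) / P(¬B, ¬M)

From the definition of conditional probability, this becomes

P(¬L | ¬B, ¬M) = P(¬M | ¬B, ¬L) P(¬B | ¬L) P(¬L) / P(¬B, ¬M)

As B has no parents, P(¬B | ¬L) = P(¬B), so

P(¬L | ¬B, ¬M) = P(¬M | ¬B, ¬L) P(¬B) P(¬L) / P(¬B, ¬M)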
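A small numerical sketch of explaining away, using P(¬L | ¬B, ¬M) = P(¬M | ¬B, ¬L) P(¬B) P(¬L) / P(¬B, ¬M). Only P(B) = 0.5 and P(M | ¬B, L) = 0 are quoted in the text; P(L) = 0.5 and P(M | ¬B, ¬L) = 0 are assumptions made for illustration and should be checked against Fig. 3.5.1. The names are hypothetical.

```python
# Quoted in the text: P(B) = 0.5 and P(M | ¬B, L) = 0.
# ASSUMED (not stated in the text): P(L) = 0.5 and P(M | ¬B, ¬L) = 0.
P_B = 0.5
P_L = 0.5  # assumption
P_M_GIVEN_NOT_B = {True: 0.0, False: 0.0}  # P(M | ¬B, L = l), keyed by l


def p_not_b_not_m():
    """P(¬B, ¬M) = sum over l of P(¬M | ¬B, l) P(¬B) P(l)."""
    total = 0.0
    for l in (True, False):
        p_l = P_L if l else 1.0 - P_L
        total += (1.0 - P_M_GIVEN_NOT_B[l]) * (1.0 - P_B) * p_l
    return total


def explaining_away_p_not_l():
    """P(¬L | ¬B, ¬M) = P(¬M | ¬B, ¬L) P(¬B) P(¬L) / P(¬B, ¬M)."""
    numerator = (1.0 - P_M_GIVEN_NOT_B[False]) * (1.0 - P_B) * (1.0 - P_L)
    return numerator / p_not_b_not_m()


p_not_l = explaining_away_p_not_l()
print(p_not_l)  # 0.5 under the assumed values
```

Under these assumptions the result falls back to the prior P(¬L) = 0.5, which is lower than the 0.63934 obtained from ¬M alone: once the uncharged battery accounts for the arm not moving, ¬M no longer says anything about the block.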

From Fig. 3.5.1, the values can be taken and P(¬B, ¬M) can be calculated as follows,